Giacomo Gambineri

Ashok Goel needed help.

In his regular courses at Georgia Tech, the computer science professor had at most a few dozen students. But his online class had 400 students: students based all over the world; students who viewed his class videos at different times; students with questions. Lots and lots of questions. Maybe 10,000 questions over the course of a semester, Goel says. It was more than he and his small staff of teaching assistants could handle.

"We were going nuts trying to answer all these questions," he says.

And there was another problem. He was worried that online students were losing interest over the course of the term. It was a well-founded concern: According to data from educational researcher Katy Jordan, fewer than 15 percent of students complete a Massive Open Online Course (MOOC) in which they've enrolled.

It so happens that Goel is an expert in artificial intelligence. In fact, the course he was teaching, Computer Science 7637, is titled Knowledge-Based Artificial Intelligence. It occurred to him that perhaps what he needed was an artificially intelligent teaching assistant, one that could handle the routine queries while he and his human TAs focused on the more thoughtful, creative questions. Personal attention is so important in teaching; what if they could give personal attention at scale?

Enter Jill Watson.

Jill Watson is the AI that Goel conceived. She lives in Piazza, the online Q&A platform used by Georgia Tech. It's a utilitarian message board, set up like Microsoft Outlook; questions and topics are in the left-hand column, each of which opens to a threaded conversation on the right. Jill assists students in both Goel's physical class, which has about 50 students, and the more heavily attended online version.

The questions she takes are routine but necessary, such as queries about proper file formats, data usage, and the schedule of office hours: the types of questions that have firm, objective solutions. The human TAs handle the more complex problems. At least for now: Goel is hoping to use Jill as the seed of a startup, and if she's capable of more, he's keeping the information under wraps because of "intellectual property issues," he says.

Jill's existence was revealed in April, at the end of her first semester on the job. But students are largely still unaware that she's pitching in. This fall semester, she's been operating under a pseudonym, as have most of the other TAs, so they can't be Googled by curious students who want to figure out who the robot is.

"I haven't been able to tell," says Duri Long, a student in Goel's physical class, to the nods of her friends. "I think if you can't tell, it's pretty effective, and I think it's a good thing, because people can get help more rapidly."

Help is the goal in this age of artificial intelligence. Apple's Siri gets new capabilities with every OS release; Amazon's Alexa is primed to run your home. Tesla, Google, Microsoft, Facebook: all have made major investments in AI, taking tasks away from their human creators. IBM's Watson, which won a "Jeopardy!" tournament, has been tapped for medical and consumer applications and recently had a hit song.

Jill might be considered a grandchild of Watson. Her foundation was built with Bluemix, an IBM platform for developing apps using Watson and other IBM software. (Goel had an established relationship with the company.) He then uploaded four semesters' worth of data (40,000 questions and answers, along with other Piazza chatter) to begin training his AI TA. Her name, incidentally, came from a student project called "Ask Jill," out of the mistaken belief that IBM founder Thomas Watson's wife's name was Jill. (Mrs. Watson's name was actually Jeannette.)

Jill wasn't an instant success. Trained on that initial input, her early test versions gave not only incorrect answers but "strange answers," Goel recalled at a TEDx talk in October. In one case, a student asked about a program's running time; Jill responded by telling the student about design.

That wouldn't do. "We didn't want to cause confusion in the class, with Jill Watson giving some answers correctly and some answers incorrectly," Goel said. The team created a mirror version of the live Piazza forum for Jill so that they could observe her responses and flag her errors, to help her learn. Tweaking the AI, Goel said, was "almost like raising a child."

Eventually, the bugs were ironed out. Then came a breakthrough, what Goel calls a "secret sauce" (part of the intellectual property he's coy about). It included not only Jill's memory of previous questions and answers, but also the context of her interactions with students. In time, Jill's answers were 97 percent accurate. Goel decided she was ready to meet the public: his students.

Jill was introduced in January for the spring 2016 online class. For most of the semester, the students were unaware that the "Jill Watson" responding to their queries was an AI. She even answered questions with a touch of personality. For example, one student asked if challenge problems would include both text and visual data. "There are no verbal representations of challenge problems," Jill responded correctly. "They'll only be run as visual problems. But you're welcome to write your own verbal representations to try them out!" (Yes, Jill used an exclamation point.)

At the end of the semester, Goel revealed Jill's identity. The students, far from being upset, were just as pleased as the instructors. One called her "incredibly cool." Another wanted to ask her out to dinner.

Perhaps only Jill was unmoved. Her response to the student's request for a date was a blank space: literally, no comment.

For all of Jill's programming, Goel says there was a distinctly human element that made her better: his own experience. "Because this is a course I've been teaching for more than a decade, I already knew it intimately," he says. "I had a deep familiarity with it. The deep familiarity and the presence of data: that helped a lot."

Goel's Yoda-like demeanor, and his gift for teaching, are on display in his physical class. CS 7637 is held in a small auditorium in the Klaus Advanced Computing Building, a semi-circular glass-and-brick structure that looks like a chunk of flying saucer that was dropped into the middle of the Georgia Tech campus. But if the building is futuristic, the auditorium where Goel teaches is just the opposite: a couple hundred seats, arrayed between walls hung with beige and gray baffles, facing a long series of whiteboards. It's a room denuded of technological dazzle, a perfect place for Goel's unruffled instruction.

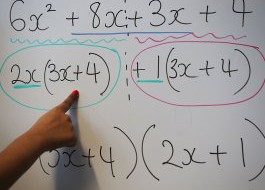

On a Monday in November, Goel wants his students to ponder the qualities of a teacup. Behind him, on two large screens, are some of the properties of the item, connected by arrows: object is → cup, object has → bottom, object is made of → porcelain.

He observes that other objects have some of the same qualities. A brick, for example, has a flat bottom. A briefcase is liftable and has a handle. So how does a machine sift through these qualities and logically determine what a teacup is? Humans, with their powers of memory and perception, can visualize a teacup instantly, Goel says. But a robot doesn't come instantly "bootstrapped," as he puts it, with all the knowledge it will need.

"A robot must prove to itself that this object has the characteristics of an object that can be used as a cup," he tells the class. Moreover, aided by input, it must also use creativity and improvisation to determine what the object is; after all, just because humans may deduce logically doesn't mean they use formal logic in their minds.

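The "prove to itself" step can be illustrated with a small sketch. The Python below is a hypothetical toy, not Goel's course material and not anything from Jill Watson: it assumes each object is described by a set of (relation, value) facts, loosely echoing the arrows on Goel's slides, and that "can be used as a cup" is defined by made-up rules (stable, liftable, open vessel). A simple forward-chaining loop applies the rules until nothing new can be derived, then checks whether the cup conclusion was proven.

```python
# Hypothetical toy knowledge base: each fact is a (relation, value) pair about one object.
Fact = tuple[str, str]

# Assumed rules for illustration only: a conclusion holds if ALL of its premises hold.
RULES: dict[Fact, list[Fact]] = {
    ("is", "stable"): [("has", "flat bottom")],
    ("is", "liftable"): [("is", "light"), ("has", "handle")],
    ("is", "open vessel"): [("has", "upward concavity")],
    ("can be used as", "cup"): [("is", "stable"), ("is", "liftable"), ("is", "open vessel")],
}


def infer(facts: set[Fact]) -> set[Fact]:
    """Forward-chain over RULES until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conclusion, premises in RULES.items():
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


def can_be_cup(facts: set[Fact]) -> bool:
    """True if the rules let us prove the object can be used as a cup."""
    return ("can be used as", "cup") in infer(facts)


# Hypothetical feature sets for the three objects mentioned in the lecture.
teacup = {("has", "flat bottom"), ("has", "handle"), ("is", "light"),
          ("has", "upward concavity"), ("is made of", "porcelain")}
brick = {("has", "flat bottom"), ("is made of", "clay")}
briefcase = {("has", "flat bottom"), ("has", "handle"), ("is", "light")}

print(can_be_cup(teacup))     # True
print(can_be_cup(brick))      # False
print(can_be_cup(briefcase))  # False
```

Under these assumed rules, the brick is proven stable but nothing more, and the briefcase is stable and liftable but lacks a concavity, so neither qualifies; only the teacup satisfies every premise. A real system would need far richer knowledge; the rules here exist only to show the mechanics of chaining from observed features to a conclusion.
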
Goel is a classroom veteran, having joined Georgia Tech's faculty in 1989. Teaching runs in his family: a native of Kurukshetra, an Indian city known as an ancient learning center, he's the son of a physics professor and the grandson of a primary school teacher. Despite his research responsibilities, Goel welcomes the opportunity to teach.

"I enjoy both research and education. Fortunately, for me the two are intertwined," he says. "In one direction, some of my research is driven by issues of learning, and in the other, I apply results from my research to teaching. Thus, my classroom is also a research laboratory for me."

He relishes the human connection with students, a bond that's the holy grail of teaching. Or as Christopher Michaud, a computer science teacher who took Goel's online course, puts it, "Teaching is a human activity, and fundamentally it's about forming bonds with your students. A machine can't do that. A machine can't love the students."

Goel envisions Jill Watson as the basis of a startup. In that, he's not alone in seeing AI as both a promising and a lucrative tool in the education field.

Education is big business, after all. In 2015, more than 35 million students signed up for a college-level MOOC, according to Class-Central.com. That's more than double the number from 2014.

IBM Watson has entered into partnerships with Sesame Workshop, Apple, and the education company Pearson to spread the AI gospel to primary and secondary schools, and other companies are jumping in. Amy Ogan, a computer and learning sciences professor at Carnegie Mellon, says that Amazon, intrigued that children were using Alexa as a tutor, is ramping up its efforts. (Amazon did not comment.)

From Pearson's perspective, "The goal is to enable better teaching and help reach every student where they are," says Tim Bozik, the education and media company's president of global product. Pearson has been developing AI approaches with IBM Watson for the past two years; it expects to make its first releases, for higher ed, in 2017.

In a demo of the Pearson/Watson technology, a student reading an online version of a psychology textbook can click on a floating Watson symbol at any time. At the end of each section in the book, Watson opens a dialogue with the student to check her comprehension. If the student expresses uncertainty or resistance, Watson may hint at or prompt the answers. Then there's a quiz. Once again, if the student is confused, Watson opens a dialogue and reiterates the points of the text. The Watson AI isn't foolproof (you can't compel the student to stay in the dialogue), but it can provide insights a teacher may be unaware of.

Pearson is introducing the technology in a handful of American schools. In years to come, it and other firms expect to spread the use of AI tutoring to underserved communities in the US and other countries. This is a topic close to Goel's heart: In the US alone, at least 30 million people are functionally illiterate; worldwide, that figure is close to 800 million.

For that reason, AI theorists aren't concerned that agents will take human teachers' jobs. What the agents are intended to do is assist them and improve learning in general. Those who worry that AI might replace teachers, Goel says, should look at the other side of the coin. "It's not a question of taking human jobs away. It's a question of reaching those segments of the population that don't have human teachers," he says. "And those are very large segments."

But first, AI instructors have a lot to learn. A colleague of Carnegie Mellon's Ogan tested an AI model in urban, suburban, and rural settings; it was most effective in the suburban context, but less so in urban and rural areas. And different students require different strategies. A human teacher who deliberately makes errors may help her students solve problems; an AI version, without the same kind of connection, may fail.

In one study, Ogan and her colleagues created a teachable agent named Stacy, designed to solve linear equations when interacting with children. The hope was that students would respond to Stacy's mistakes and realize their own. Instead, students struggled even more. Some lost interest entirely.

"This is one of those places in which the AI is going to have to get smarter in terms of learning from the students when it's doing something that doesn't make sense," Ogan says. Otherwise, AI is no better than those frustrating phone trees you get when you call the cable company. Ogan says there are ways to detect what the students respond to, using cameras and software to detect facial expressions, but it's very much a work in progress.

Perhaps more of a concern is what will happen to the data. At the primary and secondary school level, there's already plenty of contention over Common Core and local control. In an era when information is the coin of the realm, who's to say the system won't be abused?

Goel acknowledges some concerns. After all, the identity of Jill Watson wasn't revealed until the end of the spring semester. Until then, his students were essentially part of a big experiment: If you know you're dealing with an AI, does it fundamentally alter interaction?

"It's an uncharted question," he says. He's seen a lot of interest from social scientists, he adds, though he hasn't yet established any partnerships. After all, the original purpose was just to ease TA workloads.

Meanwhile, an improved Jill continues her work under a pseudonym. Goel and the TAs still don't want students knowing when they're getting the AI, so all but two TAs are working under pseudonyms as well. She continues to do an excellent job, the human TAs say.

But she's not ready to teach, or even to take on all the responsibilities of a human TA. "To capture the full scope of what a human TA does, we're not months away or years away. We're decades, maybe centuries away, at least in my estimation," Goel says. "None of us (AI experts) thinks we're going to build a virtual teacher for 100 years or more."

Those questions, unlike the ones in his class, will have to wait.

Creative art direction by Redindhi Studio. Illustrations by Giacomo Gambineri.

Read the original article on Backchannel. Copyright 2017.